Boston Dynamics & DeepMind: AI-Powered Robots Transform Development

Explore how the Boston Dynamics and DeepMind AI partnership opens multimodal robotics to developers through smart APIs and automated workflows.

Boston Dynamics + DeepMind: The AI Power‑Couple That Could Make Robots Do Your Laundry (and Then Some)

Boston Dynamics and Google DeepMind just announced a joint AI partnership at CES 2026. The collaboration promises to fuse Boston’s “athletic intelligence” with DeepMind’s Gemini Robotics foundation models, opening a new chapter for humanoid robots in industry, research, and maybe even your living room.

Why This Partnership Matters for Developers, Not Just CEOs

If you’ve ever watched a Spot robot trot across a warehouse or an Atlas flip a box, you’ve seen the physical side of Boston Dynamics’ magic. What you haven’t seen yet is a brain that could let those machines interpret language, reason about tools, and adapt on the fly.

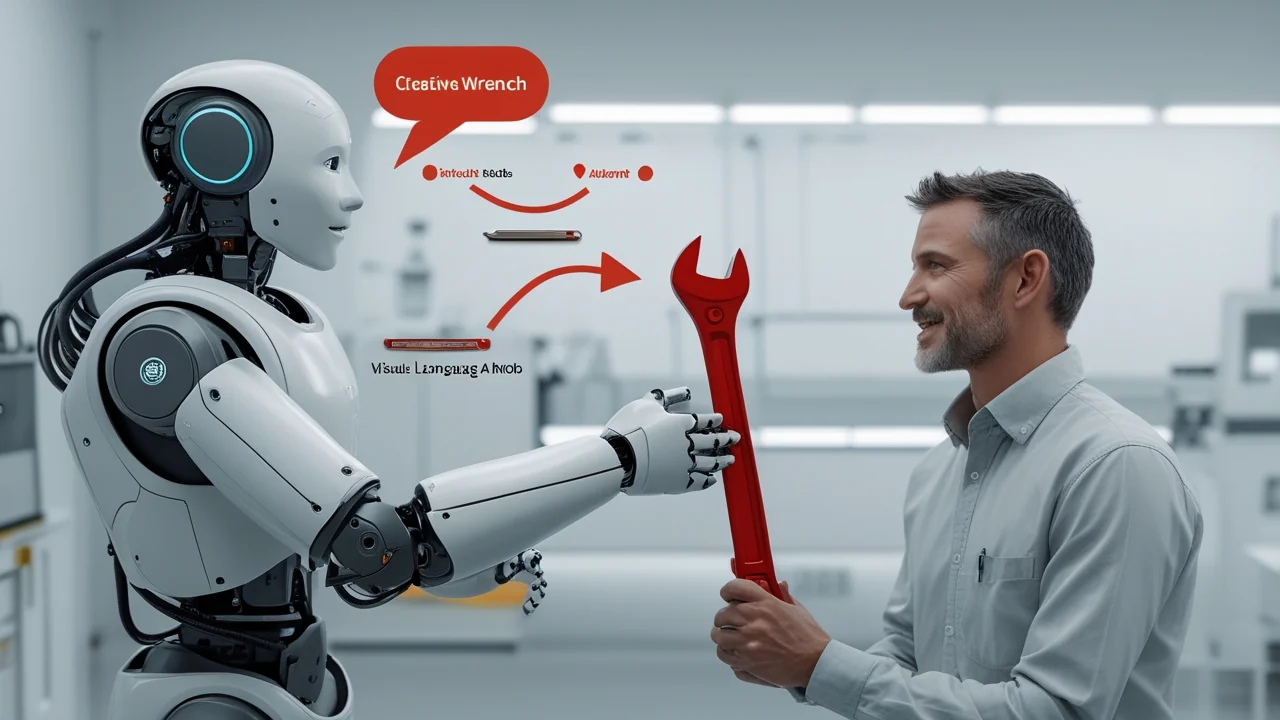

DeepMind’s Gemini Robotics models are multimodal foundation models that can ingest video, audio, and text, then output vision‑language‑action (VLA) plans. Pair that with Atlas’s whole‑body balance and agility and you get a robot that could, in theory, understand a command like “hand me the red wrench” and actually fetch it without a pre‑programmed script.

For a web‑development agency, this isn’t just sci‑fi fluff. It means new APIs, fresh data pipelines, and a whole ecosystem of AI‑powered robotics services you can embed into client solutions—think automated assembly lines, smart inventory bots, or even interactive museum guides.

Below we’ll break down the technical nuts and bolts, explore real‑world use cases, share some code snippets to get you started, and warn you about the pitfalls that can turn a shiny robot demo into a costly flop.

The Core Ingredients: Gemini Robotics + Atlas

| Component | What It Does | Why It’s Cool |
|---|---|---|
| Gemini Robotics foundation model | Large‑scale multimodal model trained on video, text, and sensor data. Generates vision‑language‑action (VLA) plans. | One model can be adapted to robots of many shapes instead of training a separate network for each platform. |
| Atlas humanoid | 1.5 m tall, 80 kg, dozens of actuated joints, force‑feedback sensors, and a built‑in perception stack. | Capable of parkour‑level agility; now getting a brain that can reason about that agility. |
| Joint research pipeline | Real‑time streaming of sensor data → Gemini inference → low‑latency motor commands. | Enables closed‑loop perception and control at ~30 Hz, crucial for dynamic tasks. |

TL;DR: Gemini gives Atlas understanding; Atlas gives Gemini muscle. Together they can learn on the fly, not just follow pre‑written scripts.
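To make the ~30 Hz closed loop from the table concrete, here is a minimal Python sketch of a fixed‑rate perceive → infer → act cycle. The three callables (read_sensors, infer, send_command) are hypothetical stand‑ins for the robot’s sensor stream, a Gemini inference call, and the motor interface; nothing here is a Boston Dynamics or DeepMind API.

```python
import time
from typing import Callable, Dict, List

Observation = Dict[str, float]
Command = Dict[str, str]


def run_control_loop(
    read_sensors: Callable[[], Observation],   # hypothetical sensor reader
    infer: Callable[[Observation], Command],   # hypothetical model call
    send_command: Callable[[Command], None],   # hypothetical motor interface
    rate_hz: float = 30.0,
    max_cycles: int = 90,
) -> List[float]:
    """Run a fixed-rate perceive -> infer -> act loop and return each cycle's duration (s)."""
    period = 1.0 / rate_hz  # about 33 ms per cycle at 30 Hz
    durations: List[float] = []
    next_tick = time.monotonic()
    for _ in range(max_cycles):
        start = time.monotonic()
        command = infer(read_sensors())
        send_command(command)
        durations.append(time.monotonic() - start)
        next_tick += period
        remaining = next_tick - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)  # wait for the next tick
        else:
            # Overran the budget: resynchronise rather than bursting to catch up.
            next_tick = time.monotonic()
    return durations


# Dry run with stub functions standing in for sensors, model, and motors.
sent: List[Command] = []
cycle_times = run_control_loop(
    lambda: {"tilt": 0.0}, lambda obs: {"action": "hold"}, sent.append, max_cycles=30
)
overruns = sum(d > 1 / 30 for d in cycle_times)
print(f"{len(sent)} commands sent, {overruns} cycle(s) over budget")
```

Resynchronising after an overrun, instead of firing several cycles back to back, keeps stale observations from driving the motors.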

A Quick Peek at the API: From Text Prompt to Robot Motion

DeepMind plans to expose Gemini Robotics via a RESTful inference endpoint (think Google Cloud AI Platform). Here’s a minimal Python sketch that sends a natural‑language command and receives a serialized motion plan; the URL, payload fields, and response shape are illustrative placeholders rather than a documented API:

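```python
import json

import requests

# Placeholder endpoint for illustration; not a documented Gemini Robotics URL.
ENDPOINT = "https://gemini-robotics.googleapis.com/v1/infer"


def get_motion_plan(command: str, camera_feed_url: str) -> dict:
    """Send a natural-language command plus a camera frame URL; return the motion plan."""
    payload = {
        "prompt": command,
        "vision_input": {"image_url": camera_feed_url},
        "output_format": "motion_plan",
    }
    headers = {"Authorization": "Bearer YOUR_ACCESS_TOKEN"}  # substitute a real token
    # Fail fast instead of letting a slow or unreachable endpoint stall the caller.
    resp = requests.post(ENDPOINT, json=payload, headers=headers, timeout=1.0)
    resp.raise_for_status()
    return resp.json()["motion_plan"]  # parsed from the JSON response body


# Example: ask Atlas to hand you a screwdriver
plan = get_motion_plan(
    "Pick up the flat-head screwdriver from the table and hand it to me.",
    "http://robot.local/camera/front.jpg",
)
print(json.dumps(plan, indent=2))
```

The returned motion_plan could then be streamed to Atlas’s low‑level controller. The address, port, and newline‑delimited JSON framing below are hypothetical placeholders for whatever control interface the robot actually exposes: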

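```python
import json
import socket

# Placeholder address of the robot's controller on the local network.
ATLAS_IP = "192.168.0.42"
ATLAS_PORT = 5555


def send_plan_to_atlas(plan: dict) -> None:
    """Send one motion plan as a single newline-terminated JSON message over TCP."""
    message = json.dumps(plan).encode("utf-8") + b"\n"  # newline marks the message end
    with socket.create_connection((ATLAS_IP, ATLAS_PORT), timeout=1.0) as s:
        s.sendall(message)


send_plan_to_atlas(plan)
```

Pro tip: Keep the round‑trip latency under 100 ms. Anything slower and the robot may start “thinking” like a human: slow and indecisive.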
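One way to act on the tip above is to time every command round trip and flag any that exceed the budget. A minimal sketch, assuming the 100 ms figure as the budget; in practice the timed block would wrap the inference request and the send to the robot, but here a time.sleep call stands in for that work so the example runs on its own:

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator

LATENCY_BUDGET_S = 0.100  # 100 ms round-trip target


@contextmanager
def latency_budget(stats: Dict[str, float], budget_s: float = LATENCY_BUDGET_S) -> Iterator[None]:
    """Time the enclosed block and record whether it exceeded the budget."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        stats["elapsed_s"] = elapsed
        stats["over_budget"] = elapsed > budget_s


stats: Dict[str, float] = {}
with latency_budget(stats):
    time.sleep(0.02)  # stand-in for the inference request + send to the robot
print(f"round trip {stats['elapsed_s'] * 1000:.1f} ms, over budget: {stats['over_budget']}")
```

Feeding these measurements into your dashboards makes it easy to spot when a model update or network change starts eating into the budget.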

Real‑World Use Cases That Could Go Live This Year

| Industry | Scenario | How Gemini + Atlas Helps |
|---|---|---|
| Automotive manufacturing | Assemble engine blocks, torque bolts, and move heavy parts across the line. | VLA models could interpret assembly instructions from CAD PDFs and adapt to part variations on the fly. |
| Warehouse logistics | Sort incoming parcels, load pallets, and handle fragile items. | Vision‑language models recognize barcodes and fragile‑item cues, then generate safe grasp trajectories. |
| Construction sites | Carry tools, inspect structural elements, and assist workers at height. | Multimodal reasoning lets the robot ask for clarification (“Do you want the hammer or the drill?”) and comply safely. |
| Healthcare | Deliver medication, fetch medical devices, or assist in patient mobility. | Safety constraints layered around the model would be needed to meet ISO 13482 (safety requirements for personal care robots). |

Note: The partnership is still early-stage, so most deployments will start as pilot projects with human‑in‑the‑loop supervision.
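The construction‑site row mentions the robot asking for clarification. A minimal sketch of that decision, assuming the perception stack has already returned a list of detected object labels: simple keyword matching stands in for the model’s multimodal reasoning here, and resolve_target is a hypothetical helper, not part of any Gemini API.

```python
import re
from typing import List, Optional, Tuple


def resolve_target(command: str, detected: List[str]) -> Tuple[Optional[str], Optional[str]]:
    """Return (target, None) when exactly one detected object is named in the command,
    otherwise (None, question) so the robot asks instead of guessing."""
    text = command.lower()
    # Whole-word match so multi-word labels such as "red wrench" also work.
    named = [obj for obj in detected if re.search(rf"\b{re.escape(obj.lower())}\b", text)]
    if len(named) == 1:
        return named[0], None
    options = named or detected  # ambiguous or unnamed: offer what is in view
    if not options:
        return None, "I don't see any tools. What should I bring?"
    if len(options) == 1:
        return None, f"Do you want the {options[0]}?"
    return None, "Do you want the " + ", the ".join(options[:-1]) + f" or the {options[-1]}?"


print(resolve_target("Bring me a tool", ["hammer", "drill"]))
```

Asking whenever the match is not unique trades a little speed for never acting on a guess, which suits a site where the wrong tool at height is a hazard.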

Getting Your Hands Dirty: Building a Simple Vision‑Language‑Action Demo

Let’s walk through a tiny demo that runs locally (no robot required). We’ll use a pre‑trained Gemini‑Lite model, assuming it is available as a Hugging Face checkpoint (the model name below is a placeholder; substitute one you actually have access to), to generate a textual plan, then visualize it with matplotlib.

```python
# Install: pip install transformers torch matplotlib
import matplotlib.pyplot as plt
import torch
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# Placeholder checkpoint name; substitute a model you actually have access to.
model_name = "deepmind/gemini-lite-vision-language-action"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSeq2SeqLM.from_pretrained(model_name)


def generate_plan(image_path: str, command: str) -> str:
    # Simplified: only the text command is encoded. A real VLA model would
    # also take the image at image_path as pixel values.
    inputs = tokenizer(command, return_tensors="pt")
    with torch.no_grad():  # inference only, no gradients needed
        outputs = model.generate(**inputs, max_new_tokens=100)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


plan = generate_plan("sample_table.jpg", "Pick up the blue cup")
print("Generated plan:", plan)

# Visualize (mock): show the input image with the plan as its title
plt.imshow(plt.imread("sample_table.jpg"))
plt.title("Robot's View: " + plan)
plt.axis("off")
plt.show()
```

The output might read:

```text
Generated plan: Move arm to (x=0.42, y=0.12, z=0.78); close gripper; lift 0.2 m; rotate wrist 45°; bring to user.
```

While this is a toy example, it mirrors the flow you’ll eventually use with a real Atlas robot: image + language prompt → VLA plan → motor commands.
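To get from a text plan like the sample output above to something a controller could consume, the string has to become structured steps. A minimal parsing sketch, assuming the model emits semicolon‑separated clauses in that exact format (a real VLA model would more likely emit actions directly, so treat the format and the Step type as hypothetical):

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List

NUM = r"-?\d+(?:\.\d+)?"
UNITS = {"m": "m", "°": "deg"}  # unit suffixes seen in the sample plan


@dataclass
class Step:
    action: str
    params: Dict[str, float] = field(default_factory=dict)


def parse_plan(text: str) -> List[Step]:
    """Split a 'clause; clause; ...' plan into Step objects."""
    steps: List[Step] = []
    for clause in text.strip().rstrip(".").split(";"):
        clause = clause.strip()
        if not clause:
            continue
        # Named coordinates such as x=0.42
        params = {k: float(v) for k, v in re.findall(rf"(\w+)=({NUM})", clause)}
        if not params:
            # A single quantity with a unit, such as "lift 0.2 m" or "rotate wrist 45°"
            m = re.search(rf"({NUM})\s*(m|°)", clause)
            if m:
                params[UNITS[m.group(2)]] = float(m.group(1))
        # The action is the wording before the first digit or parenthesis
        action = re.split(r"[\d(]", clause, maxsplit=1)[0].strip().lower()
        steps.append(Step(action, params))
    return steps


plan_text = "Move arm to (x=0.42, y=0.12, z=0.78); close gripper; lift 0.2 m; rotate wrist 45°; bring to user."
for step in parse_plan(plan_text):
    print(step)
```

Each Step could then be mapped to a motor primitive, and anything the parser cannot interpret should be rejected rather than guessed at.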

Pitfalls to Watch Out For

Latency Hell

- What happens: If the inference call takes >200 ms, the robot’s control loop can become unstable.

- Fix: Run Gemini inference on local GPUs (edge modules such as NVIDIA Jetson, or workstation cards such as an RTX 4090) or use TensorRT‑optimized models.
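A complementary guard is to give every inference call a hard deadline and substitute a safe command when it is missed, so the control loop never waits on a slow model. A minimal sketch with Python’s concurrent.futures; the 200 ms default mirrors the threshold above, and SAFE_HOLD is a hypothetical “hold position” command rather than a real Atlas message:

```python
import concurrent.futures as cf
import time
from typing import Callable, Dict

# Hypothetical "freeze in place" command used when inference is too slow.
SAFE_HOLD: Dict[str, str] = {"action": "hold_position"}

# A small pool so one stuck call does not block the next request.
_executor = cf.ThreadPoolExecutor(max_workers=4)


def infer_with_deadline(infer: Callable[[], Dict[str, str]], deadline_s: float = 0.2) -> Dict[str, str]:
    """Return infer()'s result if it finishes within deadline_s, else SAFE_HOLD."""
    future = _executor.submit(infer)
    try:
        return future.result(timeout=deadline_s)
    except cf.TimeoutError:
        # The late call keeps running in its worker thread (threads cannot be
        # killed), but its result is ignored and the robot holds position.
        return SAFE_HOLD


def slow_model() -> Dict[str, str]:
    time.sleep(0.5)  # simulate an inference call that misses the deadline
    return {"action": "grasp"}


print(infer_with_deadline(slow_model))
```

Holding position is only one possible fallback; depending on the task, re‑using the last valid plan for a cycle or two may be safer.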

Safety Blind Spots

- What happens: VLA models may generate a motion that collides with unseen obstacles.

- Fix: Always run a runtime safety checker (e.g., a simple collision‑prediction network) before executing commands.
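A collision‑prediction network is beyond a snippet, so this sketch swaps in a plain geometric check to show the gating idea: before execution, sample points along the planned end‑effector path and reject the plan if any sample leaves the workspace or comes within a safety margin of a known obstacle. Obstacles are modelled as axis‑aligned boxes purely for illustration; first_violation and the box coordinates are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its min and max corners (metres)."""
    lo: Point
    hi: Point

    def contains(self, p: Point, margin: float = 0.0) -> bool:
        return all(low - margin <= c <= high + margin for low, c, high in zip(self.lo, p, self.hi))


def densify(waypoints: Sequence[Point], step: float) -> List[Point]:
    """Add intermediate points so consecutive samples are at most `step` apart."""
    samples: List[Point] = []
    for a, b in zip(waypoints, waypoints[1:]):
        n = max(1, math.ceil(math.dist(a, b) / step))
        for k in range(n):
            t = k / n
            samples.append(tuple(ai + t * (bi - ai) for ai, bi in zip(a, b)))
    if waypoints:
        samples.append(tuple(waypoints[-1]))
    return samples


def first_violation(waypoints: Sequence[Point], workspace: Box, obstacles: List[Box],
                    margin: float = 0.05, step: float = 0.02) -> Optional[Point]:
    """Return the first unsafe sample on the path, or None if the path looks clear."""
    for p in densify(waypoints, step):
        if not workspace.contains(p) or any(o.contains(p, margin) for o in obstacles):
            return p
    return None


workspace = Box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
table_leg = Box((0.4, 0.4, 0.0), (0.6, 0.6, 0.5))
path = [(0.1, 0.5, 0.2), (0.9, 0.5, 0.2)]  # both endpoints clear, but the segment crosses the box
print(first_violation(path, workspace, [table_leg]))
```

Sampling along each segment matters: checking only the two endpoints of the example path would miss the crossing entirely.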

Domain Drift

- What happens: Training data from factory floors may not transfer to a kitchen environment.

- Fix: Use few‑shot fine‑tuning with on‑site data; DeepMind recommends 50–100 labeled episodes for decent adaptation.

Data Privacy

- What happens: Streaming camera feeds to the cloud can expose proprietary visuals.

- Fix: Encrypt the feed (TLS) and consider on‑prem inference if compliance is a concern.
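For links between your own services and an on‑prem inference server or robot, Python’s standard ssl module can add TLS with certificate and hostname verification. A minimal sketch, assuming the receiving endpoint terminates TLS with a certificate your CA bundle trusts; the host, port, and CA file in the example comment are placeholders:

```python
import socket
import ssl
from typing import Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client context that verifies the server certificate and hostname."""
    ctx = ssl.create_default_context(cafile=ca_file)  # CERT_REQUIRED + check_hostname by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx


def send_encrypted(host: str, port: int, payload: bytes, ca_file: Optional[str] = None) -> None:
    """Open a TLS connection to host:port and send payload."""
    ctx = make_client_context(ca_file)
    with socket.create_connection((host, port), timeout=2.0) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            tls.sendall(payload)


# Example (placeholder address and CA bundle for an on-prem inference server):
# send_encrypted("inference.local", 8443, b'{"frame_id": 1}', ca_file="site-ca.pem")
```

Keeping verification on, rather than disabling it to get past a self‑signed certificate, is what actually protects the feed; issue the robot and server certificates from a site CA instead.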

Best Practices for Building Robot‑AI Services

Modular Architecture

Separate perception, reasoning, and actuation into distinct micro‑services. This lets you swap in a better vision model without touching the motion controller.

Versioned Model Registry

Keep a registry (e.g., MLflow) of Gemini model versions. Roll back instantly if a new release introduces regressions.

Telemetry‑First Design

Log every sensor frame, inference request, and motor command. Use Grafana dashboards to spot latency spikes or safety violations early.

Human‑in‑the‑Loop Testing

Start with a “sandbox mode” where the robot visualizes the plan but doesn’t move. Only after a human approves should the motion be executed.

Continuous Integration for Robotics

Use simulation environments like Isaac Gym or Webots in CI pipelines to validate new code before it touches the real Atlas.
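A small sketch can combine two of these practices: perception, reasoning, and actuation sit behind separate interfaces, and a sandbox flag plus an approval callback keep the robot from moving until a human signs off. The interfaces are hypothetical Python Protocols, not Boston Dynamics or DeepMind APIs; a real camera client, Gemini wrapper, or motion controller could sit behind each one.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol

Plan = List[Dict[str, str]]


class Perception(Protocol):
    def observe(self) -> Dict[str, List[str]]: ...


class Planner(Protocol):
    def plan(self, observation: Dict[str, List[str]], command: str) -> Plan: ...


class Actuator(Protocol):
    def execute(self, plan: Plan) -> None: ...


@dataclass
class RobotService:
    perception: Perception
    planner: Planner
    actuator: Actuator
    approve: Callable[[Plan], bool]  # human-in-the-loop gate
    sandbox: bool = True  # sandbox mode: plan and report, never move

    def handle(self, command: str) -> str:
        observation = self.perception.observe()
        plan = self.planner.plan(observation, command)
        if self.sandbox:
            return f"sandbox: {len(plan)} step(s) planned, not executed"
        if not self.approve(plan):
            return "rejected by operator"
        self.actuator.execute(plan)
        return "executed"


# Stand-in components for a dry run; real services would implement the same methods.
class FakeCamera:
    def observe(self) -> Dict[str, List[str]]:
        return {"objects": ["cup"]}


class FakePlanner:
    def plan(self, observation: Dict[str, List[str]], command: str) -> Plan:
        return [{"action": "grasp", "target": observation["objects"][0]}]


class FakeArm:
    def __init__(self) -> None:
        self.executed: Plan = []

    def execute(self, plan: Plan) -> None:
        self.executed.extend(plan)


arm = FakeArm()
service = RobotService(FakeCamera(), FakePlanner(), arm, approve=lambda plan: True)
print(service.handle("pick up the cup"))
```

Because each component only depends on an interface, the same fakes can run in a CI pipeline next to the simulator, and sandbox can be switched off only once plans have been reviewed.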

Frequently Asked Questions

| Question | Answer |
|---|---|
| Do I need a Google Cloud account to use Gemini? | For hosted production inference, yes: DeepMind’s hosted endpoint runs on GCP. A lightweight version (Gemini‑Lite) is intended for edge use; check its current availability and license before planning around it. |
| Can I run Gemini on a Jetson Nano? | Not the full‑scale Gemini model, which is far too heavy. A pruned version (≈2 GB) can run on the more capable Jetson Xavier with TensorRT. |
| Is the partnership limited to Atlas? | No. While Atlas is the flagship demo, the Gemini Robotics foundation model is designed for any robot morphology, including Spot, Stretch, and custom manipulators. |
| What safety standards does this meet? | DeepMind claims compliance with ISO 10218‑1 (industrial robot safety) and ISO 13482 (personal care robot safety) when combined with Boston Dynamics’ built‑in safety layers. |
| When will we see commercial products? | Pilot deployments are slated for late 2026 in automotive assembly lines; broader commercial releases may appear in 2027. |

Quick Checklist: Are You Ready to Play with Robot AI?

- Hardware – Access to an Atlas (or any compatible robot) or a high‑fidelity simulator.

- Compute – GPU (≥ RTX 3080) for on‑prem inference or cloud credentials for Gemini API.

- Safety Gear – Emergency stop, collision sensors, and a clear safety perimeter.

- Data Pipeline – Real‑time video streaming (RTSP or gRPC) and secure token handling.

- DevOps – CI/CD for robot code, monitoring dashboards, and rollback procedures.

If you tick all the boxes, you’re primed to start building the next generation of AI‑driven robotics solutions.

Looking Ahead: What Could This Mean for the Web Development World?

- AI‑Powered UI Bots – Imagine a web app that can summon a physical robot to deliver a printed prototype to a client’s desk, all with a single button click.

- Edge‑AI Marketplace – Developers could sell “robotic plugins” (e.g., a coffee‑making routine) that run on Gemini‑powered devices, similar to npm packages today.

- New Standards – Expect emerging specs for Robot‑Web Interaction (RWI), defining how browsers talk to robots over WebSockets or WebRTC.

The Boston‑DeepMind combo is essentially laying the groundwork for a robotic internet—a network where physical agents are first‑class citizens. As a web dev agency, getting comfortable with the underlying AI models now will give you a head start when those standards finally land.

Final Thought

Boston Dynamics has spent a decade perfecting the how of robot movement. DeepMind just handed them the why—a brain that can read, reason, and act like a human. The result? A robot that could one day fetch your coffee, assemble a car, or even star in a Netflix series (hey, we can dream).

If you’re a developer who loves tinkering with cool tech, this partnership is your invitation to start building the future—one line of code, one motion plan, and one witty robot joke at a time.

[IMAGE:Warehouse pilot where Atlas hands a tool to a human worker]
